Students Francisco Ribeiro Mansilha and Lea Banovac use AI to detect brain tumors

11/12/2024 - 14:04

Could you share a bit about what inspired you to create ‘BrainScan AI’?

Francisco: ‘One of our favourite areas in data science is computer vision, and both of us are very interested in healthcare. When I found a dataset of MRI images labelled for brain tumours by medical professionals, I was immediately intrigued. I spoke to Lea about the potential of using AI to make a meaningful impact, and from there, our idea started taking shape. We both wanted to build something practical that could potentially help in the medical field.’

Lea: ‘We thought this would be a great opportunity to combine our interests in computer vision and healthcare. Plus, it is a project we can showcase as a strong portfolio piece when applying for work placements next semester.’

What were the main phases of this project?

Lea: ‘We structured the project in three main phases. The first phase was to understand the data and determine the best model to use. This meant analysing the MRI images and figuring out how to approach tumour detection with a reliable AI model.’

Francisco: ‘Once we had a solid understanding, we moved to the second phase, where we trained the model. We refined it using our dataset and implemented the explainable AI feature to help visualise the model's predictions. This step was crucial for transparency, especially for use in a medical context.’

Lea: ‘The third phase involved converting our trained models into a web app. We wanted to make it accessible to non-programmers, like doctors and medical researchers, so we focused on creating an intuitive interface.’

Tell us more about how the AI model works

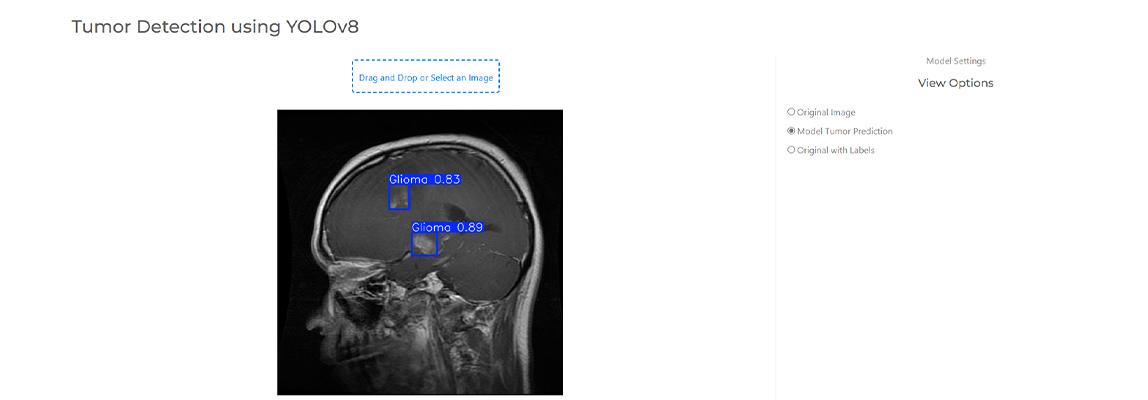

Francisco: ‘We used a model based on the YOLO (You Only Look Once) algorithm, fine-tuned to detect brain tumours in MRI images. The dataset consists of 5249 images, labelled with four classes: Glioma, Meningioma, No Tumor, and Pituitary. The algorithm looks at each section of an image, checks for a tumour, and makes predictions based on what it has learned from labelled data. Once trained, the model can evaluate new images and identify tumours, providing the class, the location, and a confidence score.’
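To make the "class, location, and confidence score" output concrete, here is a minimal sketch of how such detections could be represented and filtered. It is not the students' code: the `Detection` type, the `describe` helper, the 0.5 threshold, the class order, and the example values are all assumptions for illustration.

```python
from dataclasses import dataclass

# Class names as listed in the interview; the index order is an assumption.
CLASS_NAMES = ["Glioma", "Meningioma", "No Tumor", "Pituitary"]


@dataclass
class Detection:
    """Hypothetical container for one predicted box."""
    class_id: int      # index into CLASS_NAMES
    confidence: float  # score between 0 and 1
    box: tuple         # (x_min, y_min, x_max, y_max) in pixels


def describe(detections, min_confidence=0.5):
    """Keep detections at or above the threshold, highest confidence first."""
    kept = [d for d in detections if d.confidence >= min_confidence]
    kept.sort(key=lambda d: d.confidence, reverse=True)
    return [(CLASS_NAMES[d.class_id], d.box, round(d.confidence, 2)) for d in kept]


# Made-up values for one scan, not real model results.
example = [
    Detection(class_id=0, confidence=0.91, box=(120, 84, 198, 160)),
    Detection(class_id=3, confidence=0.32, box=(40, 40, 70, 72)),
]
print(describe(example))
```

In this sketch the low-confidence box is dropped, so only the Glioma detection is reported.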

‘We trained our model extensively using MRI images from various perspectives, like the side, top, and front views of the brain. It was crucial to have a diverse dataset and to reserve some images for testing to evaluate our model’s performance objectively.’
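Reserving images for testing can be sketched as a per-class split, so that every class appears in both sets. The interview does not say how the split was made; the 20% test share, the fixed seed, the `stratified_split` name, and the file names below are assumptions.

```python
import random
from collections import defaultdict


def stratified_split(samples, test_fraction=0.2, seed=0):
    """Split (path, label) pairs so each class keeps a similar share in both sets."""
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append((path, label))

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for label in sorted(by_label):
        items = by_label[label]
        rng.shuffle(items)
        n_test = max(1, round(len(items) * test_fraction))
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test


# Hypothetical file names: 10 scans per class.
samples = [
    (f"scan_{label}_{i}.png", label)
    for label in ["Glioma", "Meningioma", "No Tumor", "Pituitary"]
    for i in range(10)
]
train, test = stratified_split(samples)
print(len(train), len(test))
```

The test images are never shown to the model during training, which is what allows them to give an objective estimate of performance.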

Lea: ‘Francisco focused heavily on the model selection and training. On top of that, he built the web app himself, a skill that is only lightly covered in our studies, so it took a lot of extra self-study to pull it off.’

What role did explainable AI play in your project?

Lea: ‘Explainable AI was my primary focus. It is essential for us to be able to explain how the model makes decisions, especially in the medical field. I implemented an explainable AI algorithm that highlights the regions of the image that influenced the model’s prediction. This transparency allows doctors to understand why the model flagged certain areas as tumorous.’
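The interview does not name the explainable AI algorithm that was used. As one simple illustration of the general idea, the sketch below uses occlusion sensitivity: mask one patch of the image at a time and record how much the model's score drops. The `toy_score` function is a stand-in for a real model, and all names here are hypothetical.

```python
def occlusion_map(image, score_fn, patch=2, fill=0.0):
    """Return a grid where each cell is the score drop when that patch is masked."""
    height, width = len(image), len(image[0])
    baseline = score_fn(image)
    heatmap = []
    for top in range(0, height, patch):
        row = []
        for left in range(0, width, patch):
            masked = [list(r) for r in image]  # copy so the input stays unchanged
            for y in range(top, min(top + patch, height)):
                for x in range(left, min(left + patch, width)):
                    masked[y][x] = fill
            row.append(baseline - score_fn(masked))
        heatmap.append(row)
    return heatmap


def toy_score(image):
    """Stand-in for a model's confidence: mean brightness of the top-left 2x2 corner."""
    return sum(image[y][x] for y in range(2) for x in range(2)) / 4


image = [
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
heatmap = occlusion_map(image, toy_score)
print(heatmap)
```

Patches with a large score drop are the regions the model relied on most, and these are the areas a heatmap overlay would highlight.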

Francisco: ‘Without explainable AI, you can’t build trust with medical professionals. It’s about proving that the model works and showing exactly how it works.’

What features does your web app offer?

Francisco: ‘The web app is divided into three main sections. The home page gives an overview of the project and provides links to our test dataset. The second section, Image Analysis, includes tools for adjusting contrast, brightness, and rotating the images. The third part is the Tumour Detection feature, where users can upload an MRI scan from the test set. The model then predicts the presence of a tumour, provides a confidence score, and offers the option to download the image with the prediction overlay.’
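The Image Analysis tools mentioned above (contrast, brightness, rotation) can be sketched on a small grayscale image stored as a list of rows. This is an illustrative assumption about how such adjustments commonly work, not the app's actual implementation; the function names and the mid-grey pivot of 128 are hypothetical choices.

```python
def adjust(image, brightness=0, contrast=1.0):
    """Scale each pixel around mid-grey (128), shift it, and clamp to 0..255."""
    def clamp(value):
        return max(0, min(255, int(round(value))))

    return [
        [clamp(contrast * (p - 128) + 128 + brightness) for p in row]
        for row in image
    ]


def rotate_90_clockwise(image):
    """Rotate a 2D list of pixels by 90 degrees clockwise."""
    return [list(col) for col in zip(*image[::-1])]


# Tiny 2x2 grayscale example with made-up pixel values.
image = [[0, 128],
         [200, 255]]
brighter = adjust(image, brightness=20)
rotated = rotate_90_clockwise(image)
print(brighter)
print(rotated)
```

Clamping keeps the adjusted values within the valid 8-bit range, so a pixel that is already white stays white when brightness is increased.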

What challenges did you face during this project?

Francisco: ‘One of the biggest challenges was obtaining more comprehensive datasets. We had to work with what was available. For our model to be used in a real-world setting, we would need a much more diverse set of images and labels.’

How do you envision BrainScan AI being used in the future?

Lea: ‘Ideally, we would love to collaborate with a medical company or hospital. With their resources and more extensive datasets, we could significantly improve the model's accuracy and identify more types of tumours. Right now, it can only detect three tumour classes or nothing at all. There is so much room to expand this project into something genuinely useful for medical research.’

Francisco: ‘Yes, our web app is a solid proof of concept. If developed further, it could be a valuable tool for doctors and researchers who aren’t programmers but want to explore AI-driven tumour detection.’

How did your studies at BUas prepare you for this project?

Lea: ‘Our computer vision studies in Block B of our second year provided a solid foundation. We learned the fundamentals and worked on projects like segmenting and measuring plant roots, which taught us a lot. This project gave us the chance to dive into other areas of computer vision, like object detection.’

Francisco: ‘And having that hands-on experience with explainable AI was so valuable. In computer vision, being able to visualise and understand what’s happening under the hood of the model makes a huge difference.’

What’s next for you both?

Lea: ‘We are currently in the third year of our study, in our specialisation phase, focusing on projects that involve image classification. Next semester, we will be starting our internships, where we hope to apply our computer vision and AI knowledge in a professional setting.’

Francisco: ‘We are confident this project will stand out in our portfolios. It’s not just about classification; it’s also about detecting tumour locations and deploying a functional, user-friendly app. We have learned a lot, and we are excited to keep building on these skills.’

‘If you are interested in learning more or exploring our code, you can check out our GitHub repository and connect with us on LinkedIn. We hope to continue working on impactful projects that combine AI and healthcare!’

LinkedIn Francisco: https://www.linkedin.com/in/francisco-mansilha/

LinkedIn Lea: https://www.linkedin.com/in/lea-banovac-29191a24b/